Profiling Iterative Optimization Heuristics

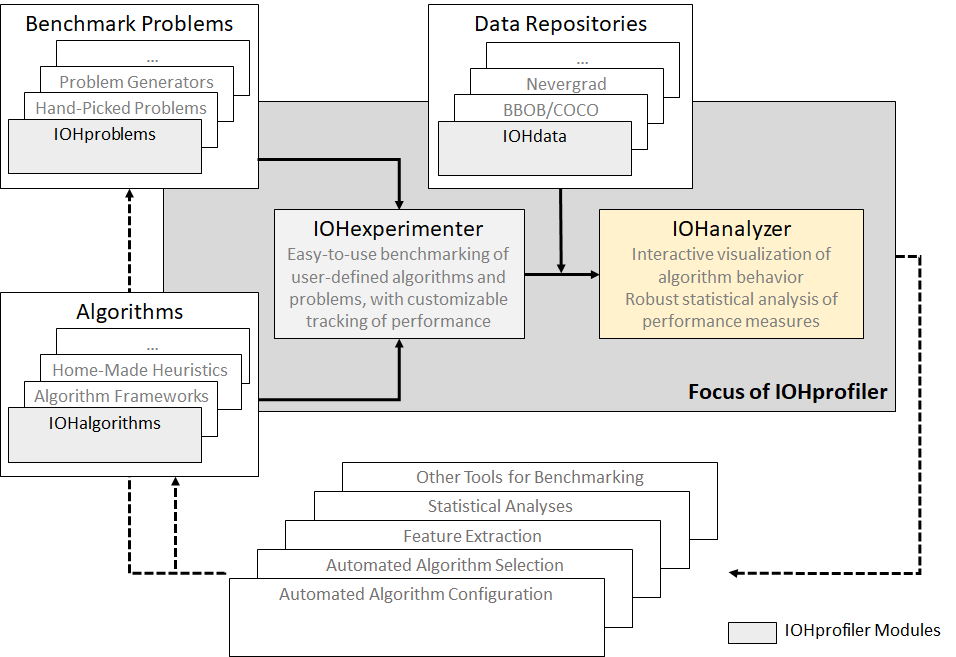

IOHprofiler is a benchmarking platform for evaluating the performance of iterative optimization heuristics (IOHs), e.g., Evolutionary Algorithms and Swarm-based Algorithms. We aim to integrate various elements of the entire benchmarking pipeline, ranging from problem (instance) generators and modular algorithm frameworks, through automated algorithm configuration techniques and feature extraction methods, to the actual experimentation, data analysis, and visualization. It consists of the following major components:

- IOHexperimenter for generating benchmarking suites, which produce experiment data.

- IOHanalyzer for the statistical analysis and visualization of the experiment data.

- IOHproblem for providing a collection of test functions.

- IOHdata for hosting the benchmarking data sets from IOHexperimenter as well as other platforms, e.g., BBOB/COCO and Nevergrad.

- IOHalgorithm for efficient implementations of various classic optimization algorithms.

The composition of IOHprofiler and the coordination of its components are summarized below.

IOHexperimenter provides:

- A generic framework to generate benchmarking suites for the optimization task you're interested in.

- A Pseudo-Boolean Optimization (PBO) benchmark suite, containing 25 test problems of the form $f\colon \{0,1\}^d \rightarrow \mathbb{R}$.

- The integration of 24 Black-Box Optimization Benchmarking (BBOB) functions on the continuous domain, namely $f\colon \mathbb{R}^d \rightarrow \mathbb{R}$.
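To make the notion of a pseudo-Boolean problem $f\colon \{0,1\}^d \rightarrow \mathbb{R}$ concrete, here is a minimal, self-contained Python sketch. It is not the IOHexperimenter API: `onemax` and `leading_ones` are plain textbook versions of two classic pseudo-Boolean functions, and `one_plus_one_ea` is a hypothetical helper that runs a (1+1) EA and records a trace of (evaluations, best-so-far value) pairs, i.e., the kind of performance data a benchmarking run produces. The small dictionary `suite` only illustrates the idea of running every problem for several independent runs.

```python
import random
from typing import Callable, Dict, List, Tuple

BitString = List[int]
Trace = List[Tuple[int, float]]  # (evaluations used, best-so-far value)


def onemax(x: BitString) -> float:
    """OneMax: the number of ones in x (maximum d at the all-ones string)."""
    return float(sum(x))


def leading_ones(x: BitString) -> float:
    """LeadingOnes: length of the longest all-ones prefix of x (maximum d)."""
    count = 0
    for bit in x:
        if bit != 1:
            break
        count += 1
    return float(count)


def one_plus_one_ea(f: Callable[[BitString], float], d: int, budget: int,
                    optimum: float, seed: int = 0) -> Trace:
    """(1+1) EA with standard bit mutation (rate 1/d), maximizing f.

    Stops after `budget` evaluations or once `optimum` is reached, and
    returns the evaluation counts at which the best-so-far value improved.
    """
    rng = random.Random(seed)
    parent = [rng.randint(0, 1) for _ in range(d)]
    best = f(parent)
    evals = 1
    trace: Trace = [(evals, best)]
    while evals < budget and best < optimum:
        # Flip each bit independently with probability 1/d.
        child = [1 - b if rng.random() < 1.0 / d else b for b in parent]
        value = f(child)
        evals += 1
        if value > best:
            trace.append((evals, value))
        if value >= best:  # accept ties so the search can drift on plateaus
            parent, best = child, value
    return trace


if __name__ == "__main__":
    # A toy "suite" (hypothetical): each problem in dimension d, three runs each.
    suite: Dict[str, Callable[[BitString], float]] = {
        "OneMax": onemax,
        "LeadingOnes": leading_ones,
    }
    d, budget = 20, 5000
    for name, f in suite.items():
        for run in range(3):
            trace = one_plus_one_ea(f, d, budget, optimum=float(d), seed=run)
            print(name, run, trace[-1])
```

Logging only the improvements keeps the trace short while still allowing the best-so-far value to be recovered at any evaluation count.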

IOHanalyzer provides:

- Performance analysis from both a fixed-target and a fixed-budget perspective.

- A web-based interface to interactively analyze and visualize the empirical performance of IOHs.

- Statistical evaluation of algorithm performance.

- R programming interfaces in the backend for even more customizable analysis.
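The fixed-budget and fixed-target perspectives can be illustrated on (evaluations, best-so-far value) traces. The following is a minimal Python sketch, not IOHanalyzer's own implementation: `fixed_budget_value`, `first_hitting_time`, and `expected_running_time` are hypothetical helper names. It assumes each trace is sorted by evaluation count, the problem is maximized, and `run_lengths` holds the total number of evaluations each run consumed. The expected running time (ERT) follows its usual definition in the benchmarking literature: the evaluations spent by all runs, divided by the number of runs that reached the target.

```python
import math
from typing import List, Optional, Tuple

Trace = List[Tuple[int, float]]  # (evaluations used, best-so-far value), maximization


def fixed_budget_value(trace: Trace, budget: int) -> Optional[float]:
    """Fixed-budget view: best-so-far value after at most `budget` evaluations."""
    best: Optional[float] = None
    for evals, value in trace:  # trace is assumed sorted by evaluation count
        if evals > budget:
            break
        best = value
    return best


def first_hitting_time(trace: Trace, target: float) -> Optional[int]:
    """Fixed-target view: evaluations needed to first reach `target` (None if never)."""
    for evals, value in trace:
        if value >= target:
            return evals
    return None


def expected_running_time(traces: List[Trace], run_lengths: List[int],
                          target: float) -> float:
    """ERT: evaluations spent by all runs (hitting time if successful,
    full run length otherwise), divided by the number of successful runs."""
    total, successes = 0, 0
    for trace, length in zip(traces, run_lengths):
        hit = first_hitting_time(trace, target)
        if hit is not None:
            total += hit
            successes += 1
        else:
            total += length
    return total / successes if successes else math.inf
```

For instance, with a target of 10, if one run hits it after 12 evaluations and a second run of length 20 never does, the ERT is (12 + 20) / 1 = 32 evaluations.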

Documentation

In addition to this wiki page, several more detailed documents are available:

- General: https://arxiv.org/abs/1810.05281

- IOHanalyzer: https://dl.acm.org/doi/abs/10.1145/3510426

- IOHexperimenter: https://arxiv.org/abs/2111.04077

Links

- Project repositories:
  - Main repository: https://github.com/IOHprofiler
  - Algorithms: https://github.com/IOHprofiler/IOHalgorithm
  - Performance data: (for the time being, these are available via the web interface at http://iohprofiler.liacs.nl, or at https://github.com/IOHprofiler/IOHdata)

- IOHanalyzer Online Service: https://iohanalyzer.liacs.nl

- Bug reports:
  - IOHanalyzer: https://github.com/IOHprofiler/IOHanalyzer/issues
  - IOHexperimenter: https://github.com/IOHprofiler/IOHexperimenter/issues

- General Contact: iohprofiler@liacs.leidenuniv.nl

- Mailing List: https://lists.leidenuniv.nl/mailman/listinfo/iohprofiler


License

BSD 3-Clause

Cite us

Citing IOHprofiler

Developers

Diederick Vermetten

Jacob de Nobel

Furong Ye

Hao Wang

Ofer M. Shir

Carola Doerr

Thomas Bäck